Overview

Pharo is a modern, open source, fully-featured implementation of the Smalltalk programming language and environment. Pharo is derived from Squeak, a re-implementation of the classic Smalltalk-80 system. Whereas Squeak was developed mainly as a platform for developing experimental educational software, Pharo strives to offer a lean, open-source platform for professional software development, and also a robust and stable platform for research and development into dynamic languages and environments. Pharo serves as the reference implementation for the Seaside web development framework.

Persistence

In-Image persistence

If your application will only ever run in a single image, in-image persistence is an option. A suitable choice here is SandstoneDB, which can be loaded using the Catalog Browser in Pharo. For more information see the Seaside tutorial at the Hasso-Plattner-Institut.

SQLite

SQLite is a self-contained, high-reliability, embedded, full-featured, public-domain, SQL database engine. SQLite is the most used database engine in the world.

It is easy to use SQLite as your persistence engine. The one thing that may cause confusion is the need to add a symbolic (soft) link to the SQLite library in the Pharo VM's plugin directory. On a macOS system this may look something like this (in .../Contents/MacOS/Plugins) :-

lrwxr-xr-x 1 rpillar admin 25 9 Nov 22:22 libsqlite3.dylib -> /usr/lib/libsqlite3.dylib

With the link in place, this is possible :-

    | db name rs row |
    db := UDBCSQLite3Connection on: '/Users/rpillar/temp/test.db'.
    db open.
    rs := db execute: 'select first_name from contacts'.
    row := rs next.
    name := row at: #first_name.
    Transcript show: 'My name is : ', name.
    rs close.
    db close.

Note: in Pharo 7 (and possibly in other versions) the location of the SQLite library can be set using the Settings tool -> Databases link.

XML

For the StockMAN app (see the Seaside Examples page) there is a need to process responses from eBay that are made up of XML data. Here are some brief (and possibly incomplete) notes about how to parse XML in Pharo.

Example data

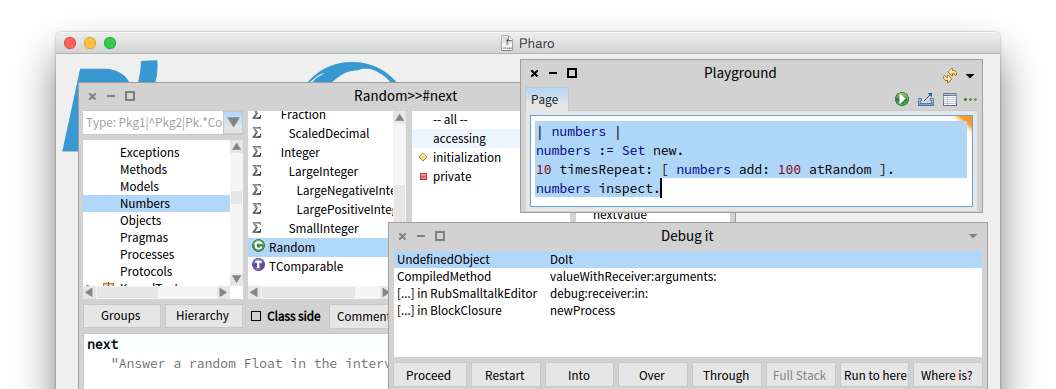

With the Pharo playground and inspector it is possible to explore this data using methods in the XMLDOMParser class. These are invaluable tools for exploring classes and methods that may be unfamiliar.

    doc := XMLDOMParser parse: xml.
    result := doc nodes elementAt: 'GetCategoriesResponse'.

The result variable is an XMLElement object, and we can use its methods to examine and iterate over the XML data. Note: when the prettified XML shown above is parsed with the XMLDOMParser class, the whitespace between elements may also appear as items in the node collections.

    "does this element have lower-level elements?"
    result hasElements.
    "what are the names of its immediate elements? (an XMLOrderedList, a Collection subclass)"
    result elementNames.
    "an element may have nodes (an XMLNodeList). An XMLNodeList has a 'collection' that represents the nodes / elements at various levels in the XML structure."
    "we can check whether each item in the collection is an element"
    ( result nodes collection at: 2 ) isElement.
    "what is the element's name?"
    ( result nodes collection at: 2 ) name.
    "is the node a content node, i.e. does it contain data?"
    ( result nodes collection at: 10 ) isContentNode.
    "does the element contain lower-level elements?"
    ( result nodes collection at: 2 ) hasElements.
    "if this node hasElements (answers true) then we can look at its nodes using the 'nodes' method"
    myNodes := ( result nodes collection at: 10 ) nodes.

It is then possible to process the XML to retrieve the appropriate data.

    doc := XMLDOMParser parse: xml.
    result := doc nodes elementAt: 'GetCategoriesResponse'.
    "get the CategoryCount element value"
    count := ( result nodes elementAt: 'CategoryCount' ) contentString.
    "a collection of the Category nodes that we want to look at"
    data := ( result nodes elementAt: 'CategoryArray' ) nodes.
    "collect the data you want - we know which elements we want so we can name them"
    data collection do: [ :e | Transcript show: ( e nodes elementAt: 'CategoryName' ) contentString; cr ].

For the XML example given above, to get the category data that we want to store we can do something like this.

    oc := OrderedCollection new.
    data collection do: [ :e |
        dict := Dictionary new.
        dict at: #CategoryName put: ( ( e nodes elementAt: 'CategoryName' ) contentString ).
        dict at: #CategoryId put: ( ( e nodes elementAt: 'CategoryID' ) contentString ).
        oc add: dict ].
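Because whitespace between elements may show up as extra (non-element) nodes, it can help to keep only the element nodes before pulling values out of them. The following is a minimal sketch that uses only the XMLDOMParser calls already shown above; the small sample document is made up for illustration and is only shaped like the eBay category data.

```smalltalk
| xml doc categories rows |
"the newline between the two Category elements may be kept as a whitespace node"
xml := '<CategoryArray><Category><CategoryID>1</CategoryID><CategoryName>Books</CategoryName></Category>
<Category><CategoryID>2</CategoryID><CategoryName>Music</CategoryName></Category></CategoryArray>'.
doc := XMLDOMParser parse: xml.
categories := (doc nodes elementAt: 'CategoryArray') nodes.
"keep element nodes only, then build one dictionary per Category element"
rows := (categories collection select: [ :node | node isElement ])
	collect: [ :e |
		Dictionary new
			at: #CategoryName put: (e nodes elementAt: 'CategoryName') contentString;
			at: #CategoryId put: (e nodes elementAt: 'CategoryID') contentString;
			yourself ].
```

Using select:/collect: rather than do: with add: also avoids the separately initialised OrderedCollection.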

General Notes

Exception Handling

If you try to evaluate a piece of code that may signal an exception, you can catch and handle it:

[1 / 0] on: ZeroDivide do: [ :e | Transcript show: 'You have an error' ].

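Place the code that might signal an exception in a block and send it on:do:. The first argument names the exception class that you want to handle (several classes can be combined with a comma); the second is a handler block that takes the exception as its argument and specifies what to do when the exception is signalled.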

To make the whole expression answer a value (here 2) instead:

[1 / 0] on: Error do: [ :e | e return: 2 ].

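To resume execution at the point where the exception was signalled (ZeroDivide is resumable, and resume passes nil back, so 1 / 0 answers nil):

[1 / 0] on: Error do: [ :e | e resume ].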
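The handler variations above can be combined with an ExceptionSet and with ensure:. A minimal sketch using only core Pharo classes; the variable names are illustrative.

```smalltalk
| answer either cleanedUp |
"return: makes the whole on:do: expression answer the given value"
answer := [ 1 / 0 ] on: ZeroDivide do: [ :e | e return: 0 ].
"an ExceptionSet, built with a comma, lets one handler cover several exception classes"
either := [ nil foo ] on: ZeroDivide, MessageNotUnderstood do: [ :e | e return: #handled ].
"ensure: runs its cleanup block even when the protected block signals an exception"
cleanedUp := false.
[ [ 1 / 0 ] ensure: [ cleanedUp := true ] ] on: ZeroDivide do: [ :e | e return: nil ].
Transcript show: answer printString; cr; show: either printString; cr; show: cleanedUp printString; cr.
```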

For more helpful stuff see this

Dates

The processing of dates in any programming language / environment can be difficult - here are some examples using Pharo :-

    '21/112021' asDate. <<21 November 2021>>
    '21 September, 2021' asDate. <<21 September 2021>>
    '2021-11-21' asDate. <<21 November 2021>>
    '11/21/2021' asDate. <<21 November 2021>>

Note that the last example above uses the format mm/dd/yyyy. If we try to parse a dd/mm/yyyy string in the same way, an exception is raised. To specify the date pattern explicitly :-

Date readFrom: '21/11/2021' readStream pattern: 'dd/mm/yy'.<<21 November 2021>>

For other date patterns see the class comment of the DateParser class.
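If you would rather not depend on a parser guessing the field order, a date in a known layout can also be split and built explicitly. A minimal sketch, assuming the input is strictly day/month/year separated by slashes; parseDMY is a hypothetical helper block, not part of Pharo or DateParser.

```smalltalk
| parseDMY date |
"hypothetical helper: assumes strictly dd/mm/yyyy with / separators, no validation"
parseDMY := [ :aString |
	| parts |
	parts := (aString substrings: '/') collect: [ :each | each asNumber ].
	Date year: parts third month: parts second day: parts first ].
date := parseDMY value: '21/11/2021'.
Transcript show: date printString; cr.
```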

Example Classes

A few notes about some Pharo classes that I have created to assist in the wider development of other applications.

CT-CSV

A small class to load a CSV file with a header row. Data is stored as an OrderedCollection of dictionaries, one for each line; the keys are the header values, which are therefore assumed to be the first row of the file.

Methods are provided to load data from a CSV file and to write data to a CSV file.
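The row-of-dictionaries layout can be sketched with core Pharo string methods. This is not the CT-CSV code, only a minimal illustration that assumes no quoted fields, no embedded commas and no empty fields (substrings: skips empty fields, so such a row would have too few values and with:do: would fail).

```smalltalk
| csvText lines headers rows |
csvText := 'Year,Month,Takings
13,1,250
13,2,310'.
"the first line holds the header values, which become the dictionary keys"
lines := csvText lines.
headers := lines first substrings: ','.
rows := OrderedCollection new.
lines allButFirst do: [ :line |
	| row |
	row := Dictionary new.
	"pair each header with the value in the same position; values stay as strings"
	headers with: (line substrings: ',') do: [ :key :value | row at: key put: value ].
	rows add: row ].
```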

An additional method has been added to the Dictionary class to facilitate the process :-

    collectKeysAsStrings: aBlock
        "Answer an Array containing each of my keys converted to a String. Note: aBlock is not currently evaluated."
        | index newCollection |
        newCollection := Array new: self size.
        index := 0.
        self keysDo: [ :each |
            newCollection at: (index := index + 1) put: ( each asString ) ].
        ^ newCollection
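If the string keys are used to produce a CSV header, note that a Dictionary does not guarantee any key order, so the columns may not come out in a predictable order. A minimal sketch of one way to get a stable header with core Pharo only: convert the keys to strings (as the method above does), sort them, and join them with commas. Alphabetical order is an assumption made for illustration; a real writer would more likely preserve the original column order.

```smalltalk
| row keys header |
row := Dictionary new.
row at: #Year put: 13; at: #Month put: 1.
"same conversion as collectKeysAsStrings:, then sorted so the order is stable"
keys := (row keys collect: [ :each | each asString ]) asSortedCollection asArray.
header := String streamContents: [ :stream |
	keys do: [ :each | stream nextPutAll: each ] separatedBy: [ stream nextPut: $, ] ].
Transcript show: header; cr.
```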

CT-DataFrame

A minimal implementation (inspired by R) of a dataframe object. An introduction (in terms of R) can be found here. The code can be found on GitHub.

The original intent is to provide a means of filtering and grouping data and, through a suitable set of methods, to offer an interface that other services (for example charting and visualizations) can make use of.

In this initial implementation the dataframe takes a set of row objects (dictionary objects from CTDBx or CTCSV) as an OrderedCollection and stores them in the instance variable dataset. Various select methods can then be used to filter the data into a resultset, which is then used to summarize the data or to perform specific calculations on specific fields: mean, sum and standard deviation (more will be added). Some rudimentary grouping functionality also exists. The data (filtered or grouped) can also be returned as a JSON string, in the series instance variable.

To load data into the dataframe on the basis of a database query :-

    | df q r |
    df := CTDataFrame new.
    q := CTDBxQuery new.
    q queryTable: 'CTDBxTableIncome'; dbSearch: { { #year -> 13 } }.
    r := CTDBxResult new.
    r conn: ( UDBCSQLite3Connection on: '/Users/richardpillar/temp/csv.db').
    r conn open.
    r processSearchQuery: ( q queryString ) with: q queryTableObject dbTableFields.
    r conn close.
    df dataset: r result.

First step - select the data that you want to work with - selectAll / selectEquals: ...

df selectEquals: 'Year' with: 13.

Get a mean.

df selectAll; mean: 'Takings'.
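The select-then-calculate flow can be illustrated on a plain OrderedCollection of dictionaries, the same shape the dataframe's dataset holds. This is a minimal sketch, not CTDataFrame's implementation; the select: below is only an assumed equivalent of selectEquals:, and the keys and values are made up.

```smalltalk
| dataset resultset takings mean |
dataset := OrderedCollection new.
dataset
	add: (Dictionary new at: 'Year' put: 13; at: 'Takings' put: 200; yourself);
	add: (Dictionary new at: 'Year' put: 13; at: 'Takings' put: 300; yourself);
	add: (Dictionary new at: 'Year' put: 12; at: 'Takings' put: 999; yourself).
"assumed equivalent of selectEquals: 'Year' with: 13 - keep the matching rows"
resultset := dataset select: [ :row | (row at: 'Year') = 13 ].
"mean of one field over the selected rows"
takings := resultset collect: [ :row | row at: 'Takings' ].
mean := (takings inject: 0 into: [ :sum :each | sum + each ]) / takings size.
```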

Group data (by 'Month', for example) and then calculate a mean for each group.

df groupBy: 'Month'; groupByMean: 'Takings'.
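Grouping followed by a per-group mean can be sketched with core Pharo: bucket the rows by the grouping field, then reduce each bucket. A minimal illustration with made-up data, not CTDataFrame's own code.

```smalltalk
| dataset groups means |
dataset := OrderedCollection new.
dataset
	add: (Dictionary new at: 'Month' put: 1; at: 'Takings' put: 100; yourself);
	add: (Dictionary new at: 'Month' put: 1; at: 'Takings' put: 300; yourself);
	add: (Dictionary new at: 'Month' put: 2; at: 'Takings' put: 50; yourself).
"bucket the rows by the value of the grouping field"
groups := Dictionary new.
dataset do: [ :row |
	(groups at: (row at: 'Month') ifAbsentPut: [ OrderedCollection new ]) add: row ].
"reduce each bucket to the mean of the chosen field"
means := Dictionary new.
groups keysAndValuesDo: [ :month :rows |
	| values |
	values := rows collect: [ :row | row at: 'Takings' ].
	means at: month put: (values inject: 0 into: [ :a :b | a + b ]) / values size ].
```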

Summarize data - return max / min / standard_deviation / mean for each field.

df summarize.
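The per-field summary can be sketched for a single numeric column with core Pharo, assuming the column has already been extracted into a collection of numbers. Which standard deviation CTDataFrame uses is not stated, so the population version (dividing by n) below is an assumption; R's sd() divides by n - 1.

```smalltalk
| values mean summary |
values := #(2 4 4 4 5 5 7 9).
mean := (values inject: 0 into: [ :a :b | a + b ]) / values size.
summary := Dictionary new.
summary
	at: #min put: (values inject: values first into: [ :a :b | a min: b ]);
	at: #max put: (values inject: values first into: [ :a :b | a max: b ]);
	at: #mean put: mean;
	"population standard deviation: square root of the mean squared deviation"
	at: #sd put: ((values inject: 0 into: [ :a :b | a + (b - mean) squared ]) / values size) sqrt.
```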

Summarize data by group.

df groupBy: 'Month'; groupSummarize.

It is also possible to return series data (which could be used as input to charting software) from within the _resultset_.

df seriesDataWith: 'Takings'.

Or within a _groupset_.

df seriesGroupDataWith: 'CustNumbers'.

It is also possible to amend a column's values using a regex.

    | r |
    r := 'T' asRegex.
    df transformColumnData: 'Day' with: r replacingMatchesWith: 'X'.
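The transformColumnData:with:replacingMatchesWith: selector appears to mirror RxMatcher's copy:replacingMatchesWith:. A minimal sketch of the same idea applied directly to a collection of row dictionaries, assuming every row has a 'Day' key; this is an illustration, not the dataframe's code.

```smalltalk
| rows r |
rows := OrderedCollection new.
rows
	add: (Dictionary new at: 'Day' put: 'Tue'; yourself);
	add: (Dictionary new at: 'Day' put: 'Mon'; yourself).
r := 'T' asRegex.
"replace every match in each row's 'Day' value; rows without a match are unchanged"
rows do: [ :row |
	row at: 'Day' put: (r copy: (row at: 'Day') replacingMatchesWith: 'X') ].
```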

Similarly you can add a column to, or remove a column from, the dataframe.

df addColumn: 'Time' with: [ DateAndTime current ].

df removeColumn: 'Name'.
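On a collection of row dictionaries, adding a column amounts to storing a new key in every row, and removing one to dropping that key. A minimal sketch; whether addColumn:with: evaluates its block once per row (as assumed here) or once for the whole dataframe is not stated.

```smalltalk
| rows valueBlock |
rows := OrderedCollection new.
rows
	add: (Dictionary new at: 'Name' put: 'Ann'; at: 'Day' put: 'Mon'; yourself);
	add: (Dictionary new at: 'Name' put: 'Bob'; at: 'Day' put: 'Tue'; yourself).
"add a column: evaluate the block once per row and store the answer under the new key"
valueBlock := [ DateAndTime now ].
rows do: [ :row | row at: 'Time' put: valueBlock value ].
"remove a column: drop the key from every row, ignoring rows that do not have it"
rows do: [ :row | row removeKey: 'Name' ifAbsent: [ nil ] ].
```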

It is also possible to load data into the dataframe from a CSV file. In the example here we use groupBy: and convert the result into a JSON string.

    | csv df |
    csv := CTCSV new.
    df := CTDataFrame new.
    csv loadFromCSVFile: '/Users/richardpillar/temp/income.csv'.
    df
        dataset: csv data;
        selectEquals: 'Year' with: 13 and: 'Month' with: 1;
        groupBy: 'Week';
        groupsetToJsonString.

CT-DBx

A simple database interface enabling row data to be retrieved and stored as collections of dictionary objects. The code can be found on GitHub.

There are two main objects, CTDBxQuery and CTDBxResult. A custom DSL is used to express the query that you want to perform, for example :-

    q := CTDBxQuery new.
    q queryTable: 'CTDBxTableCars'; dbSearch: { { #name -> 'Richard' . #age -> 21 } . { #name -> #( #orderby desc ) . #limit -> 1 } }.
    q inspect.

This will produce the following SQL query :-

select model,year from cars where name='Richard' and age='21' order by name desc limit 1;
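The first inner array of the dbSearch: argument corresponds to the WHERE clause of the generated SQL. A minimal sketch of how such a clause could be rendered from associations, assuming simple equality tests only; this is not CTDBxQuery's code. Values are interpolated directly (and quoted, as in the output above), so this is only safe for trusted input; with untrusted input, parameter binding in the database driver is the safer route.

```smalltalk
| conditions where |
conditions := { #name -> 'Richard' . #age -> 21 }.
"render each association as key='value' and join them with ' and '"
where := String streamContents: [ :stream |
	conditions
		do: [ :assoc |
			stream
				nextPutAll: assoc key asString;
				nextPutAll: '=''';
				nextPutAll: assoc value asString;
				nextPut: $' ]
		separatedBy: [ stream nextPutAll: ' and ' ] ].
Transcript show: where; cr.
```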

Various helper methods are provided such as

This means that you can run the database queries in the following way :-

    | rs q |
    rs := CTDBxResult new.
    rs conn: ( UDBCSQLite3Connection on: '/Users/richardpillar/temp/test.db' ).
    rs conn open.
    q := CTDBxQuery new.
    q queryTable: 'CTDBxTableContacts'; dbSearchAll.
    rs processSearchQuery: q queryString with: q queryTableObject dbTableFields.

The result field (in the CTDBxResult object) will hold a collection of dictionaries representing the table rows that have been retrieved.

There has also been an attempt to make it possible, within a CTDBxResultTable*, to express relationships between tables. Note that this is only an early attempt (and may change). One disadvantage is that each relationship is expressed as a separate query rather than as a join. Here is an example method that performs a query to retrieve performance data based on the current data within a CTDBxResultTableCars object :-

    rPerformance
        | rs |
        self query queryTable: 'CTDBxTableSchemaPerformance'; dbSearch: { { #name -> self model } }.
        rs := CTDBxResultTablePerformance new.
        rs conn: self conn.
        rs resultset: ( rs processSearchQuery: query queryString ).
        ^ rs

For more info on this package please see the dedicated CTDBx page here.